Kristi Noem's sacking exposes first fissures in Trump's second term

9News

Donald Trump's sacking of the public face of his immigration crackdown has exposed the first fissures in the cabinet of his second term.

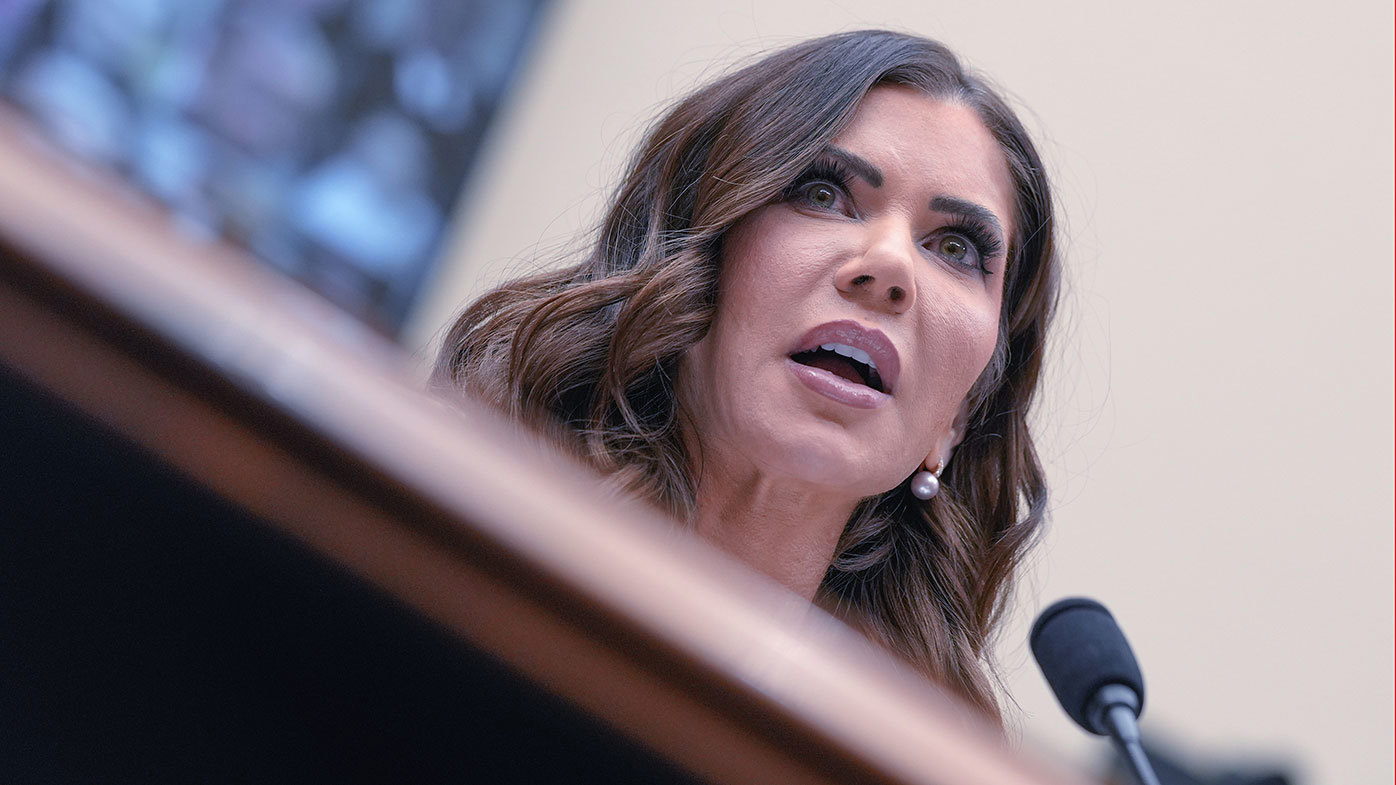

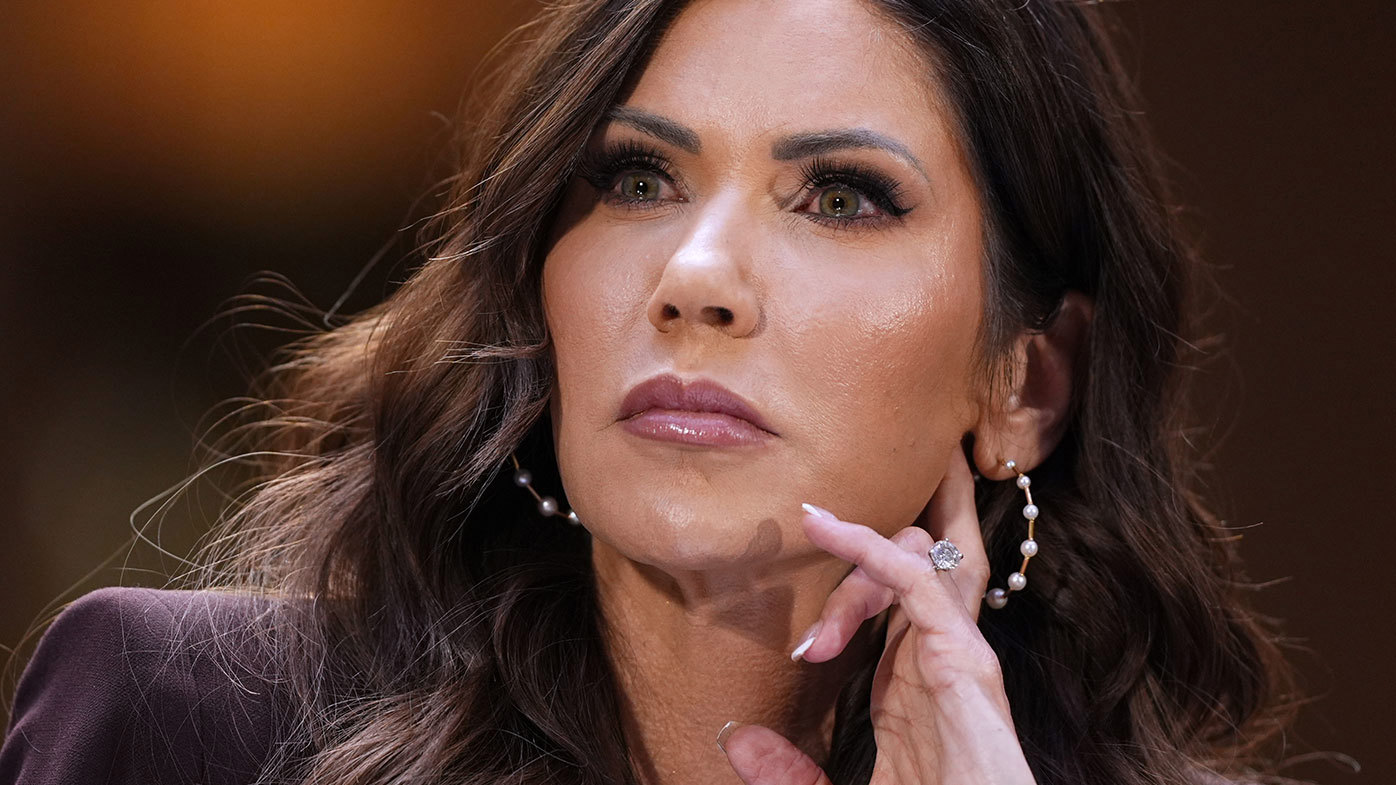

Kristi Noem is the first cabinet member to be fired in the president's second term, having lasted a little more than a year in the role.

It's a stark contrast to Trump 1.0, when the sacking of a cabinet member felt like an almost weekly occurrence.

But in his second term, the president has surrounded himself with those less likely to draw his ire.

His first cabinet was filled with establishment Republicans, technocrats and respected business figures.

But those officials were likely to behave like conventional cabinet members, pushing to maintain precedent and meekly accepting criticism.

His new cabinet is more in the Trump mould: true believers in the president's agenda with an aggressive attitude towards the media and Democratic politicians.

They are expected to follow the Trump ethos: never admit fault, and never apologise.

But Noem's handling of the Department of Homeland Security proved too much for even Trump to defend.

Being maligned and despised by Democrats might not make the president raise an eyebrow, but criticism from Republicans is another matter altogether.

Appearing before a Senate hearing this week, Noem was sharply criticised by Republicans Thom Tillis and John Kennedy.

Her handling of the immigration crackdown across America has been highly unpopular and dogged by serious errors.

Not only have thousands of documented immigrants with no criminal background been detained and deported, but many American citizens have also been swept up in the raids.

Noem has also been the subject of unflattering headlines about her own leadership.

A Coast Guard pilot was fired for leaving Noem's blanket on a plane.

She also spent $US220 million ($313 million) of taxpayer money on an ad ostensibly promoting the department, which was widely seen as self-serving.

The ad showed Noem, wearing a cowboy hat, riding a horse in her home state of South Dakota.

The contract for the ad was awarded, without a competitive bidding process, to the husband of prominent department spokesperson Tricia McLaughlin.

And this week, she would not deny outright, under oath, that she was having an affair with top adviser Corey Lewandowski.

In spite of the scandals, Noem has been given a newly created role as "Special Envoy for The Shield of the Americas".

It is not known whether Noem knew about her sacking before it was announced; she was taking the stage to give a speech at the time.

Noem's axing is the first major sign Trump is trying to get his administration back on track as his approval rating sits in the doldrums.

Republicans are likely to lose control of the House of Representatives and possibly the Senate in the coming midterm elections in November.

If they succeed, Democrats will be able to halt Trump's legislative agenda and potentially impeach him.

It is already becoming apparent that Democratic voters are more motivated to turn out in November.

On Tuesday, more Democrats than Republicans voted in the Texas primary elections, an alarming sign for the White House in the Republican Party's most important state.

Noem's nominated replacement is Markwayne Mullin, an Oklahoma senator who has been vocal in his support of Trump's war on Iran this week.
